Tensors are the fundamental data structure of deep learning and modern machine learning. They are multi-dimensional arrays that hold a model's inputs, parameters, and outputs, and nearly every computation in a model is expressed as an operation on them. This guide gives an overview of tensors, their core operations, and their applications in machine learning.

What Are Tensors?

Tensors generalize scalars, vectors, and matrices to any number of dimensions. Concretely, a tensor is a multi-dimensional array of numbers, and its number of dimensions (also called axes) is its order, often called its rank in deep learning libraries.

Examples of Tensors

- Scalar: A single value (0-dimensional tensor), for example s = 5.

- Vector: A 1-dimensional tensor, for example v = [1, 2, 3].

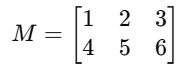

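- Matrix: A 2-dimensional tensor, for example M = [[1, 2], [3, 4]].

- Higher-Order Tensor: A tensor with three or more dimensions. For example, a single RGB image can be stored as a 3D tensor of shape (height, width, 3), one 2D grid of values per color channel.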
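The four kinds of tensor above can be sketched as NumPy arrays, whose `ndim` attribute reports the number of dimensions. The 3D example is a hypothetical 2x2 image with three color channels, filled with zeros purely for illustration.

```python
import numpy as np

s = np.array(5)                  # scalar: 0 dimensions
v = np.array([1, 2, 3])          # vector: 1 dimension
M = np.array([[1, 2], [3, 4]])   # matrix: 2 dimensions
img = np.zeros((2, 2, 3))        # hypothetical tiny RGB image: height 2, width 2, 3 channels

# Print the number of dimensions of each tensor
for name, t in [("scalar", s), ("vector", v), ("matrix", M), ("image", img)]:
    print(name, t.ndim)
```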

Tensor Notation and Shapes

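A tensor's shape lists the size of each of its dimensions. For instance:

- A scalar has shape ().

- A vector of size n has shape (n,); the trailing comma is how Python writes a one-element tuple.

- A matrix with m rows and n columns has shape (m, n).

- A 3D tensor has shape (d1, d2, d3), where d1, d2, and d3 are the sizes along its three axes.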

In Python, libraries like NumPy and TensorFlow provide easy ways to work with tensors and query their shapes.
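As a small NumPy sketch (the arrays are arbitrary examples), the `shape` attribute returns the shape tuple described above, and `size` gives the total number of elements, which is the product of the dimensions.

```python
import numpy as np

v = np.array([1, 2, 3])
M = np.array([[1, 2, 3], [4, 5, 6]])   # 2 rows, 3 columns
T = np.zeros((4, 2, 3))                # 3D tensor with d1=4, d2=2, d3=3

print(np.array(5).shape)  # () for a scalar
print(v.shape)            # (3,)
print(M.shape)            # (2, 3)
print(T.shape)            # (4, 2, 3)

# Total number of elements = product of the dimensions
print(T.size)             # 24 = 4 * 2 * 3
```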

Tensor Operations

1. Basic Operations

Tensors support element-wise addition, subtraction, multiplication, and division.

import numpy as np

# Define two tensors

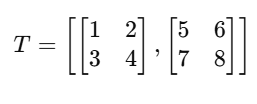

A = np.array([[1, 2], [3, 4]])

B = np.array([[5, 6], [7, 8]])

# Element-wise addition

C = A + B

print(C)2. Dot Product

The dot product of two vectors of equal length is the sum of the products of their corresponding elements.
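Written out for two vectors u and v of length n, and worked through for the vectors [1, 2, 3] and [4, 5, 6] used in the code that follows:

```latex
\mathbf{u} \cdot \mathbf{v} = \sum_{i=1}^{n} u_i v_i,
\qquad
[1, 2, 3] \cdot [4, 5, 6] = 1 \cdot 4 + 2 \cdot 5 + 3 \cdot 6 = 4 + 10 + 18 = 32
```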

```python
# Dot product of two vectors
v1 = np.array([1, 2, 3])
v2 = np.array([4, 5, 6])

dot_product = np.dot(v1, v2)
print(dot_product)  # 32
```

3. Matrix Multiplication

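Matrix multiplication combines an (m, k) matrix with a (k, n) matrix into an (m, n) matrix. Unlike element-wise multiplication, each output entry is the dot product of a row of the first matrix with a column of the second; this is how, for example, a neural network layer transforms its input.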
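In index notation, for an (m, k) matrix A and a (k, n) matrix B, and worked through for the 2x2 matrices A and B defined earlier:

```latex
(AB)_{ij} = \sum_{l=1}^{k} A_{il} B_{lj},
\qquad
AB =
\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}
\begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix}
=
\begin{pmatrix} 1 \cdot 5 + 2 \cdot 7 & 1 \cdot 6 + 2 \cdot 8 \\ 3 \cdot 5 + 4 \cdot 7 & 3 \cdot 6 + 4 \cdot 8 \end{pmatrix}
=
\begin{pmatrix} 19 & 22 \\ 43 & 50 \end{pmatrix}
```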

```python
# Matrix multiplication (A @ B is equivalent shorthand)
result = np.matmul(A, B)
print(result)  # [[19, 22], [43, 50]]
```

4. Broadcasting

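When two tensors have different but compatible shapes, the smaller one is automatically expanded to match the larger one during the operation, without actually copying its data. NumPy compares shapes starting from the trailing dimension: two dimensions are compatible when they are equal or when one of them is 1, and missing leading dimensions are treated as 1.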
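A minimal NumPy sketch of these rules, using arbitrary example arrays: a scalar, a shape-(3,) row, and a shape-(2, 1) column are each stretched to match a (2, 3) matrix, while a shape-(2,) vector is rejected because its trailing dimension (2) does not match 3.

```python
import numpy as np

X = np.array([[1, 2, 3],
              [4, 5, 6]])        # shape (2, 3)

# A scalar is broadcast to every element
print(X * 10)                    # values: [[10, 20, 30], [40, 50, 60]]

# A shape-(3,) vector is treated as (1, 3) and repeated down the rows
row = np.array([100, 200, 300])
print(X + row)                   # values: [[101, 202, 303], [104, 205, 306]]

# A shape-(2, 1) column is repeated across the columns
col = np.array([[1], [2]])
print(X * col)                   # values: [[1, 2, 3], [8, 10, 12]]

# Trailing dimensions 3 and 2 are incompatible, so NumPy raises ValueError
try:
    X + np.array([1, 2])
except ValueError as err:
    print("not broadcastable:", err)
```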

5. Tensor Transpose

Transposing a 2D tensor swaps its rows and columns, so a matrix of shape (m, n) becomes one of shape (n, m).

```python
A_transposed = np.transpose(A)  # equivalently, A.T
print(A_transposed)             # [[1, 3], [2, 4]]
```

6. Reshaping

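Reshaping changes a tensor's shape without changing its data or the order of its elements. The total number of elements must stay the same: the 2x2 tensor A has 4 elements, so it can become (4, 1), (1, 4), or (4,), but not (3, 2).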

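```python
reshaped = A.reshape(4, 1)  # 4 rows, 1 column
print(reshaped)             # [[1], [2], [3], [4]]

flat = A.reshape(-1)        # -1 asks NumPy to infer the size: shape (4,)
print(flat)                 # [1, 2, 3, 4]
```

Tensors in Machine Learning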

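Nearly every quantity a machine learning model works with, including its inputs, parameters, intermediate activations, and gradients, is stored as a tensor, so the operations above make up most of a model's computation.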

Applications

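- Neural Networks:

  - Represent weights, biases, and activations.

  - Perform matrix multiplications in each layer: a fully connected layer multiplies its input by a weight matrix and adds a bias.

- Image Processing:

  - RGB images are represented as 3D tensors with dimensions (height, width, channels); a batch of images adds a fourth, batch dimension.

- Natural Language Processing (NLP):

  - Words or tokens are mapped to integer IDs and then to embedding vectors, so a batch of sentences becomes a tensor of shape (batch size, sequence length, embedding size).

- Audio Processing:

  - A mono audio signal is a 1D tensor of samples over time; for frequency analysis it is often converted to a spectrogram, a 2D tensor indexed by frequency and time.

- Scientific Computing:

  - Tensor operations are used in simulations and optimization problems.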
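As a rough sketch of the neural-network case, assuming a single fully connected layer with hypothetical random weights, zero biases, and a ReLU activation (the sizes are arbitrary): a batch of inputs of shape (batch, in_features) is multiplied by a weight matrix of shape (in_features, out_features), the bias vector is broadcast across the batch, and the activation is applied element-wise.

```python
import numpy as np

rng = np.random.default_rng(0)

batch, in_features, out_features = 4, 3, 2         # hypothetical sizes

x = rng.normal(size=(batch, in_features))          # inputs: one row per example
W = rng.normal(size=(in_features, out_features))   # weights
b = np.zeros(out_features)                         # biases, shape (out_features,)

# Matrix multiplication, then broadcast addition of the bias to every row
z = x @ W + b

# ReLU activation, applied element-wise
activations = np.maximum(z, 0)

print(activations.shape)  # (4, 2)
```

Real frameworks wrap the same pattern in layer objects and also track gradients; this sketch only shows the forward computation as plain tensor operations.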

Tensor Libraries

1. NumPy

The standard Python library for n-dimensional arrays (`ndarray`); it provides the basic tensor operations shown above and runs on the CPU.

```python
import numpy as np

tensor = np.array([1, 2, 3])
print(tensor.shape)  # (3,)
```

2. TensorFlow

Designed for deep learning, TensorFlow provides tensors that can run on GPUs and TPUs, along with automatic differentiation for training models.

```python
import tensorflow as tf

tensor = tf.constant([[1, 2], [3, 4]])
print(tensor.shape)  # a 2 x 2 shape
```

3. PyTorch

Popular for its dynamic (define-by-run) computation graph and its NumPy-like tensor API.

```python
import torch

tensor = torch.tensor([[1, 2], [3, 4]])
print(tensor.size())  # torch.Size([2, 2]); tensor.shape gives the same
```

Benefits of Using Tensors

- Efficiency: Tensor operations run as vectorized, parallel kernels on CPUs and GPUs.

- Flexibility: Can represent complex data structures like images and time-series data.

- Scalability: The same operations apply unchanged to tensors of any rank and size, including batched, high-dimensional data.

- Integration: Supported by all major deep learning frameworks.
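Much of the efficiency comes from vectorization: one call on a whole tensor replaces a Python-level loop, and the library runs that loop in optimized native code (or on a GPU in frameworks such as TensorFlow and PyTorch). A small NumPy sketch with a hypothetical array size compares the two approaches; timings depend on the machine, so no particular speedup is claimed here.

```python
import time
import numpy as np

# Hypothetical input size; any reasonably large length shows the effect
a = np.arange(100_000, dtype=np.float64)
b = np.arange(100_000, dtype=np.float64)

def dot_loop(x, y):
    """Dot product computed one element at a time in a Python loop."""
    total = 0.0
    for xi, yi in zip(x, y):
        total += xi * yi
    return total

start = time.perf_counter()
looped = dot_loop(a, b)
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
vectorized = np.dot(a, b)  # one call; the loop runs in native code
vec_seconds = time.perf_counter() - start

print(np.isclose(looped, vectorized))  # True: both compute the same value
print(f"loop: {loop_seconds:.4f} s, vectorized: {vec_seconds:.6f} s")
```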

Learning Resources

- Books:

  - Deep Learning by Ian Goodfellow et al.

  - Python Machine Learning by Sebastian Raschka.

- Courses:

  - Introduction to TensorFlow for Artificial Intelligence (Coursera).

  - PyTorch Fundamentals (Udacity).

- Online Resources:

  - Official documentation for TensorFlow and PyTorch.