Once a perceptron has been trained, the next step is to test its performance. Testing measures how well the perceptron generalizes, that is, how accurately it predicts on data it did not see during training. In this article, we explore the process of testing a perceptron, provide implementation details, and highlight best practices. Learn more about perceptrons and neural networks at The Coding College.

What Does Testing a Perceptron Involve?

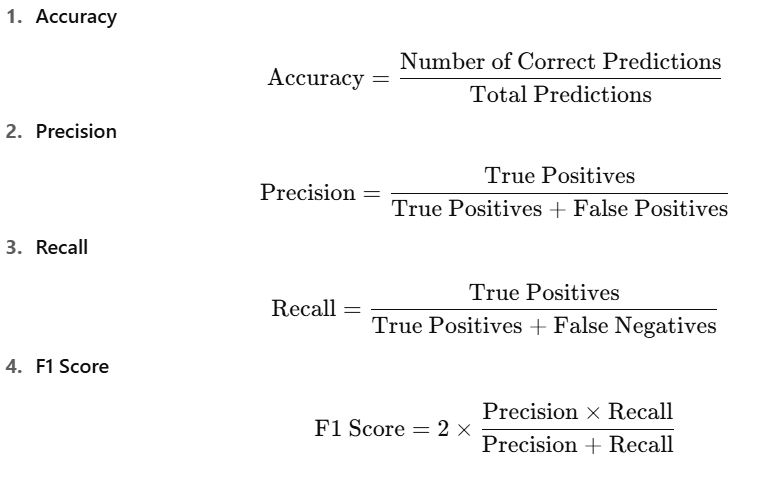

Testing a perceptron involves feeding it input data and evaluating its output against the expected results. The performance of the perceptron is quantified using metrics such as accuracy, precision, recall, and F1 score.

Steps for Testing a Perceptron

- Prepare the Test Data
  - Ensure the test data is distinct from the training data.
  - The data should be labeled for supervised evaluation.
- Feed Input to the Perceptron
  - Apply the input data to the perceptron, using the trained weights and bias to calculate the output.
- Compare Predicted and Actual Outputs
  - Compare the perceptron’s output ($\hat{y}$) with the true labels ($y$).
- Evaluate Performance
  - Use evaluation metrics to assess the perceptron’s accuracy and generalization ability.
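The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not a definitive implementation: the helper names `predict` and `accuracy`, and the values of `weights` and `bias`, are assumptions chosen for the example and stand in for whatever a real training run produced.

```python
import numpy as np


def predict(X, weights, bias):
    """Step 2: weighted sum plus bias, thresholded at zero (step activation)."""
    z = X @ weights + bias
    return (z >= 0).astype(int)


def accuracy(y_true, y_pred):
    """Steps 3-4: fraction of predictions that match the true labels."""
    return float(np.mean(y_true == y_pred))


# Step 1: labeled test data (hypothetical values for illustration).
X_test = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_test = np.array([0, 1, 1, 1])

# Hypothetical trained parameters; in practice these come from training.
weights = np.array([1.0, 1.0])
bias = -0.5

y_hat = predict(X_test, weights, bias)
print("Predictions:", y_hat)
print("Accuracy:", accuracy(y_test, y_hat))
```

Keeping prediction and scoring in separate functions makes it easy to swap in a different metric later without touching the forward pass.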

Testing a Perceptron for Binary Classification

Example Dataset: OR Gate

We will test a perceptron trained on an OR gate problem.

| Input (X1, X2) | Expected Output (y) |
|---|---|
| (0, 0) | 0 |
| (0, 1) | 1 |
| (1, 0) | 1 |
| (1, 1) | 1 |

Python Implementation for Testing

```python
import numpy as np


# Step activation function: returns 1 when the weighted sum is non-negative
def step_function(z):
    return 1 if z >= 0 else 0


# Perceptron class
class Perceptron:
    def __init__(self, learning_rate=0.1, epochs=10):
        self.lr = learning_rate
        self.epochs = epochs
        self.weights = None
        self.bias = None

    def fit(self, X, y):
        n_samples, n_features = X.shape
        self.weights = np.zeros(n_features)
        self.bias = 0

        for _ in range(self.epochs):
            for idx, x_i in enumerate(X):
                z = np.dot(x_i, self.weights) + self.bias
                y_pred = step_function(z)
                error = y[idx] - y_pred

                # Update weights and bias (no change when the prediction is correct)
                self.weights += self.lr * error * x_i
                self.bias += self.lr * error

    def predict(self, X):
        z = np.dot(X, self.weights) + self.bias
        return np.array([step_function(z_i) for z_i in z])


# Dataset: OR gate
X_train = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_train = np.array([0, 1, 1, 1])

# Train perceptron
perceptron = Perceptron(learning_rate=0.1, epochs=10)
perceptron.fit(X_train, y_train)

# Testing data
# The OR gate has only four possible inputs, so the test set reuses them in a
# different order. With a real dataset, hold out examples that were not used
# for training.
X_test = np.array([[0, 0], [1, 1], [0, 1], [1, 0]])
y_test = np.array([0, 1, 1, 1])

# Predict and evaluate
y_pred = perceptron.predict(X_test)
print("Predictions:", y_pred)

# Calculate accuracy
accuracy = np.sum(y_pred == y_test) / len(y_test)
print(f"Accuracy: {accuracy * 100:.2f}%")
```

Evaluation Metrics
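Accuracy alone can hide problems when classes are imbalanced, which is why precision, recall, and F1 score are usually reported alongside it. The sketch below computes all four from the confusion counts. The function name `binary_metrics`, the demo arrays, and the convention of returning 0.0 when a denominator is zero are assumptions made for this example.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)

    # Confusion counts
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))  # true positives
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))  # true negatives
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))  # false positives
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))  # false negatives

    accuracy = (tp + tn) / len(y_true)
    # Assumption: report 0.0 when a metric is undefined (zero denominator).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels and predictions, for illustration only.
y_true_demo = np.array([0, 1, 1, 1, 0, 1])
y_pred_demo = np.array([0, 1, 0, 1, 1, 1])

metrics = binary_metrics(y_true_demo, y_pred_demo)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Precision answers "of the inputs predicted as 1, how many really were 1?", while recall answers "of the inputs that really were 1, how many did the perceptron find?". F1 is their harmonic mean.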

Best Practices for Testing a Perceptron

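- Split Data Properly
  - Use separate training and testing datasets so that overfitting shows up in the test score instead of being hidden by it.
- Normalize Data
  - Scale input features to comparable ranges. Perceptron weight updates are proportional to the input values, so features with very different magnitudes can slow convergence.
- Use Cross-Validation
  - Apply k-fold cross-validation for a more robust estimate than a single train/test split provides.
- Analyze Errors
  - Investigate misclassified data to identify potential improvements in the model or features.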
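As an illustration of the cross-validation practice above, the following sketch runs k-fold evaluation with plain NumPy. The helper names `train_perceptron` and `k_fold_accuracy`, the synthetic dataset, and the chosen hyperparameters are all assumptions for this example; a library such as scikit-learn offers ready-made equivalents.

```python
import numpy as np


def train_perceptron(X, y, lr=0.1, epochs=20):
    """Train a single perceptron with the classic error-driven update rule."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for x_i, y_i in zip(X, y):
            y_hat = 1 if x_i @ w + b >= 0 else 0
            error = y_i - y_hat
            w += lr * error * x_i
            b += lr * error
    return w, b


def k_fold_accuracy(X, y, k=5, seed=0):
    """Return one test accuracy per fold."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))   # shuffle before splitting
    folds = np.array_split(indices, k)  # k nearly equal index groups
    scores = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        w, b = train_perceptron(X[train_idx], y[train_idx])
        y_hat = (X[test_idx] @ w + b >= 0).astype(int)
        scores.append(float(np.mean(y_hat == y[test_idx])))
    return scores


# Hypothetical synthetic data: label is 1 when x1 + x2 > 0.
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(100, 2))
y_demo = (X_demo[:, 0] + X_demo[:, 1] > 0).astype(int)

scores = k_fold_accuracy(X_demo, y_demo, k=5)
print("Fold accuracies:", [f"{s:.2f}" for s in scores])
print(f"Mean accuracy: {np.mean(scores):.2f}")
```

Each sample is used for testing exactly once and for training in the other folds, so the mean score depends less on one lucky or unlucky split.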

Advanced Testing Scenarios

For more complex datasets:

- Use multi-layer perceptrons (MLPs) for data that is not linearly separable (for example, XOR); a single perceptron can only learn a linear decision boundary.

- Employ visualization tools to inspect decision boundaries and classification results.
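To see why a single perceptron is not enough for non-linearly separable data, the sketch below trains one on the XOR gate. The helper name `train_perceptron` and the hyperparameters are assumptions for this example. Because no straight line separates the XOR classes, the accuracy cannot reach 100% however long training runs.

```python
import numpy as np


def train_perceptron(X, y, lr=0.1, epochs=100):
    """Train a single perceptron with the classic error-driven update rule."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for x_i, y_i in zip(X, y):
            y_hat = 1 if x_i @ w + b >= 0 else 0
            error = y_i - y_hat
            w += lr * error * x_i
            b += lr * error
    return w, b


# XOR gate: output is 1 only when exactly one input is 1.
X_xor = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_xor = np.array([0, 1, 1, 0])

w, b = train_perceptron(X_xor, y_xor)
y_hat = (X_xor @ w + b >= 0).astype(int)
xor_accuracy = float(np.mean(y_hat == y_xor))

print("Predictions:", y_hat)
print(f"Accuracy on XOR: {xor_accuracy * 100:.2f}%")
```

A test result like this is a signal to change the model (for instance, to an MLP with a hidden layer), not to train the same perceptron for longer.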